Technology

Ceph delivers object, block, and file storage in a single, unified system.

Ceph is highly reliable, easy to manage, and free. Ceph delivers extraordinary scalability: thousands of clients accessing exabytes of data.

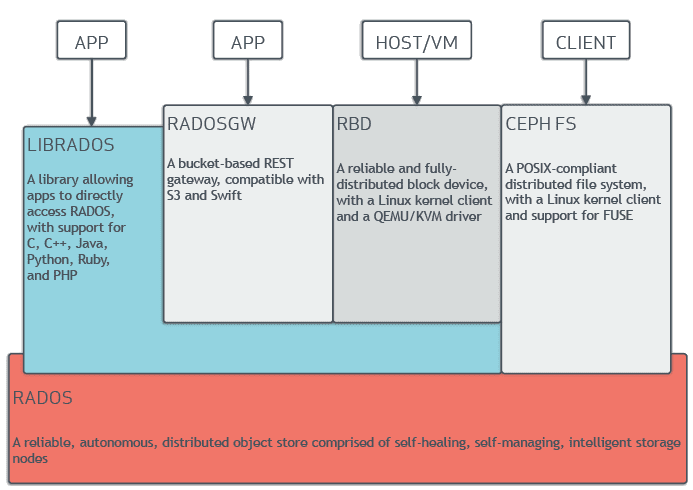

The Ceph stack: architectural overview

The RADOS-based Ceph Stack

Whatever delivery framework you require, Ceph can be adapted and applied accordingly. Ceph provides a flexible, scalable, reliable and intelligently distributed solution for data storage, built on the unifying foundation of RADOS (Reliable Autonomic Distributed Object Store). By manipulating all storage as objects within RADOS, Ceph is able to easily distribute data throughout a cluster, even for block and file storage types.

Ceph's core architecture achieves this by layering RGW (RADOS Gateway), RBD (RADOS Block Device) and CephFS (a POSIX-compliant file system) atop RADOS, along with a set of application libraries in the form of LIBRADOS for direct application connectivity.

Object storage

The Ceph RGW object storage service provides industry-leading S3 API compatibility with a robust set of security, tiering, and interoperability features. Applications which use S3 or Swift object storage can take advantage of Ceph's scalability and performance within a single data center, or federate multiple Ceph clusters across the globe to create a global storage namespace with an extensive set of replication, migration, and other data services.

Rich metadata attributes

Make use of object storage's rich metadata attributes in Ceph for efficient storage and retrieval of unstructured data:

- Create large scale data repositories that are easy to search and curate.

- Use cloud tiering to keep frequently accessed data in your cluster, and shift less frequently used data elsewhere.

Transparent cache tiering

Use your ultra-fast solid state drives as your cache tier, and economical hard disk drives as your storage tier, achievable natively in Ceph.

- Set up a backing storage pool, a cache pool, then set up your failure domains via CRUSH rules.

- Combine cache tiering with erasure coding for even more economical data storage.
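To see why erasure coding is the more economical option, it helps to compare raw capacity needs. The sketch below is illustrative arithmetic only: the function names are hypothetical, and the three-copy and 4+2 settings are example values rather than figures taken from this page. It assumes a replicated pool stores every object `copies` times, and an erasure-coded pool splits each object into `k` data chunks plus `m` coding chunks.

```python
def replicated_raw(usable: float, copies: int = 3) -> float:
    """Raw capacity consumed when every object is stored `copies` times."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return usable * copies


def erasure_coded_raw(usable: float, k: int, m: int) -> float:
    """Raw capacity consumed with k data chunks and m coding chunks per object."""
    if k < 1 or m < 0:
        raise ValueError("k must be >= 1 and m must be >= 0")
    # Each object occupies (k + m) chunks, of which only k hold user data.
    return usable * (k + m) / k


if __name__ == "__main__":
    usable_tb = 100  # hypothetical amount of user data, in TB
    print("3x replication:", replicated_raw(usable_tb), "TB raw")
    print("4+2 erasure coding:", erasure_coded_raw(usable_tb, k=4, m=2), "TB raw")
```

Under these assumptions, 100 TB of usable data occupies 300 TB of raw capacity with three copies but 150 TB with a 4+2 profile, while both layouts tolerate the loss of two devices.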

Block storage

Ceph RBD (RADOS Block Device) block storage stripes virtual disks over objects within a Ceph storage cluster, distributing data and workload across all available devices for extreme scalability and performance. RBD disk images are thinly provisioned, support both read-only snapshots and writable clones, and can be asynchronously mirrored to remote Ceph clusters in other data centers for disaster recovery or backup, making Ceph RBD the leading choice for block storage in public/private cloud and virtualization environments.
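As a rough illustration of how a virtual disk can be striped over objects, the sketch below maps a byte range of an image onto fixed-size objects. It is a simplified model, not RBD's implementation: the 4 MiB object size, the `Extent` type and the `image_extents` helper are assumptions made for this example, and it ignores custom stripe units and stripe counts.

```python
from typing import Iterator, NamedTuple

MIB = 1024 * 1024
OBJECT_SIZE = 4 * MIB  # assumed object size for this sketch


class Extent(NamedTuple):
    object_no: int      # index of the backing object within the image
    object_offset: int  # byte offset inside that object
    length: int         # number of bytes that fall in that object


def image_extents(offset: int, length: int,
                  object_size: int = OBJECT_SIZE) -> Iterator[Extent]:
    """Split an image byte range into per-object extents."""
    if offset < 0 or length < 0 or object_size <= 0:
        raise ValueError("offset and length must be >= 0, object_size > 0")
    end = offset + length
    while offset < end:
        object_no, object_offset = divmod(offset, object_size)
        # Stop at the object boundary or at the end of the request.
        chunk = min(object_size - object_offset, end - offset)
        yield Extent(object_no, object_offset, chunk)
        offset += chunk


if __name__ == "__main__":
    # A 4 MiB write starting 6 MiB into the image spans two objects.
    for extent in image_extents(6 * MIB, 4 * MIB):
        print(extent)
```

Because each extent lands in a different object, and objects are spread across the cluster, a large sequential I/O is naturally served by several devices at once. In a thinly provisioned image, objects covering ranges that were never written simply need not exist.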

Provision a fully integrated block storage infrastructure

Enjoy all the features and benefits of a conventional Storage Area Network using Ceph's iSCSI Gateway, which presents a highly available iSCSI target that exports RBD images as SCSI disks.

iSCSI overview

Integrate with Kubernetes

Dynamically provision RBD images to back Kubernetes volumes, mapping the RBD images as block devices. Because Ceph stores each block device as objects striped across its cluster, I/O is spread over many devices instead of being limited by a single standalone server.

Kubernetes and RBD documentation

Create cluster snapshots with RBD

See the Ceph RBD snapshot documentation for more details on working with snapshots.

File system

The Ceph File System (CephFS) is a robust, fully-featured POSIX-compliant distributed filesystem as a service with snapshots, quotas, and multi-cluster mirroring capabilities. CephFS files are striped across objects stored by Ceph for extreme scale and performance. Linux systems can mount CephFS filesystems natively, via a FUSE-based client, or via an NFSv4 gateway.

File storage that scales

- File metadata is stored in a separate RADOS pool from file data and served via a resizable cluster of Metadata Servers (MDS), which can scale as needed to support metadata workloads.

- Because file system clients access RADOS directly for reading and writing file data blocks, workloads can scale linearly with the size of the underlying RADOS object store, avoiding the need for a gateway or broker mediating data I/O for clients.

Export to NFS

CephFS namespaces can be exported over the NFS protocol using the NFS-Ganesha NFS server. Multiple NFS services can run side by side, including alongside RGW, exporting the same or different resources from the cluster.

CRUSH Algorithm

The CRUSH (Controlled Replication Under Scalable Hashing) algorithm keeps organizations’ data safe and storage scalable by automatically determining how data is replicated and placed across the cluster. Using CRUSH, Ceph clients and Ceph OSD daemons compute the location of storage objects themselves, avoiding the problems inherent to architectures that depend on central lookup tables.
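The idea of computing placement instead of looking it up can be sketched with weighted rendezvous hashing. This is a stand-in chosen for brevity, not the CRUSH algorithm itself: real CRUSH maps objects to placement groups and walks a hierarchy of failure domains according to rules. The `place` function, the OSD names and the weights are all hypothetical.

```python
import hashlib
import math
from typing import Dict, List


def _unit_hash(key: str) -> float:
    """Hash a string to a float greater than 0 and at most 1, the same on every machine."""
    value = int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")
    return (value + 1) / (2**64 + 1)


def place(object_name: str, osd_weights: Dict[str, float],
          replicas: int = 3) -> List[str]:
    """Pick `replicas` distinct OSDs for an object, favouring heavier OSDs."""
    if replicas > len(osd_weights):
        raise ValueError("not enough OSDs for the requested replica count")
    if any(weight <= 0 for weight in osd_weights.values()):
        raise ValueError("weights must be positive")

    def score(osd: str) -> float:
        # log(u) is <= 0; dividing by a larger weight moves it closer to 0,
        # so heavier OSDs win more often.
        return math.log(_unit_hash(f"{object_name}/{osd}")) / osd_weights[osd]

    return sorted(osd_weights, key=score, reverse=True)[:replicas]


if __name__ == "__main__":
    cluster = {f"osd.{i}": 1.0 for i in range(8)}  # hypothetical cluster
    print(place("my-object", cluster))
```

Any client holding the same cluster description derives the same answer independently, so no central table has to be consulted. Removing an OSD that was not chosen for an object leaves that object's placement unchanged, which keeps data movement limited when the cluster changes.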

Introduction to the CRUSH algorithm

Reliable Autonomic Distributed Object Store (RADOS)

RADOS (Reliable Autonomic Distributed Object Store) seeks to leverage device intelligence to distribute the complexity surrounding consistent data access, redundant storage, failure detection, and failure recovery in clusters consisting of many thousands of storage devices. RADOS is designed for storage systems at the petabyte scale: such systems are necessarily dynamic as they incrementally grow and contract with the deployment of new storage and the decommissioning of old devices. RADOS ensures that there is a consistent view of the data distribution while maintaining consistent read and write access to data objects.

Discover more

Benefits

Ceph offers dependable data backups, flexible storage options and rapid scalability. With Ceph, your organization can boost its data-driven decision making, minimize storage costs, and build durable, resilient clusters.

Learn more about the benefits of Ceph

Use cases

Businesses, academic institutions, global organizations and more can streamline their data storage, achieve reliability and scale to the exabyte level with Ceph.

See how Ceph can be used

Case studies

Ceph can run on a vast range of commodity hardware, and can be tailored to provide highly specific, highly efficient unified storage, fine-tuned to your exact needs.

Examples of Ceph in action